Cloud Computing for the Modern Mind

The idea that remote servers on the Internet could be used to store, manage, and process data instead of on a local server or machine - better known as 'cloud computing' - has revolutionized the software development industry. How did this technology become indispensable in just two decades?

For one, its trajectory correlated with the rise of the Internet and the influx of millions of new users from every corner of the planet. People communicated and worked faster than ever before, which rapidly accelerated the speed of societal change that companies had to scramble to adapt to. Modern computing problems became increasingly complicated and required more resources to solve than most teams had access to.

Cloud computing offered solutions to all of that. With resources being scalable and available on-demand, resilient systems could be built at less cost. Less busy work and infrastructure wrangling meant developers got to production in a fraction of the time. Most importantly, an increasing number of features simply could not be implemented without the cloud. Even with infinite engineering power and money, there are fundamental limitations to what can be accomplished without access to external resources.

The rise of cloud computing indicates to me that the industry has accepted an idea that was once anathema: at some point, we can't handle everything ourselves and must learn how to intentionally integrate with external resources. That same observation holds true for another type of information system, one that has also been disrupted by the rise of the Internet, the rapid pace of modern life, and the emergence of increasingly difficult problems to solve.

I am talking, of course, about the brain.

Human Brains in the Information Age

The average person today has much to pay attention to. In a single day, each one of us adapts to more change, reacts to more stimulus, and processes more information than a medieval peasant would in an entire month.

Globalization connects our world more so than ever before. While there is much benefit, it also means far more stimulus clamoring for our limited attention. Much of that is noise rather than anything important or actionable. Case in point - it is not uncommon for us to get our day ruined by a frustrating opinion held by a stranger who lives halfway around the world and whom we will never meet.

The amount of executive function available to us out-of-the-box is laughably outmatched by the amount of information that our modern world throws our way. We struggle to prioritize tasks, process new events as they happen, and learn from past experiences. There are plenty of factors, but the most common reason is simple: there is too much going on, all the time.

We have adapted to that by becoming cyborgs. We extend our abilities with computers that offer us access to the largest repository of knowledge in history. Our phones provide us near instantaneous communication with anyone on the planet. We have built computer systems that solve problems in hours that would have taken a team of scientists a few hundred years of dedicated effort. We train deep learning models on the sum total of human knowledge and power them with nuclear energy so they can help us write work emails and cure cancer.

We have integrated many of the technological advances of the past few decades into our bodies and minds. Forced separation from them feels as painful and disturbing as amputating a limb. (Or two, for software devs.) The act disconnects us from the majority of our knowledge, isolates us from the forum where most modern communication occurs, and stunts our ability to create.

I do not mean to say that technology has made us reliant and weak. We have always been reliant and weak, and that is why we build technology. The answer is not to eschew it but to learn to use it without being controlled by it. (We are definitely still working on that.) There is no getting around the fact that our brains - or "local machines" - are hopelessly outmatched against the requirements of the modern world. Why wouldn't they be, when we are working with legacy hardware that dates back millions of years?

Not enough time has passed since the early days of humanity for our gray matter to evolve significantly. Our brains are still fundamentally that of hunter-gatherer animals. We are subject to the whims of biochemicals and survival instincts that once helped us survive bear attacks, but now give us random anxiety attacks in the office bathroom.

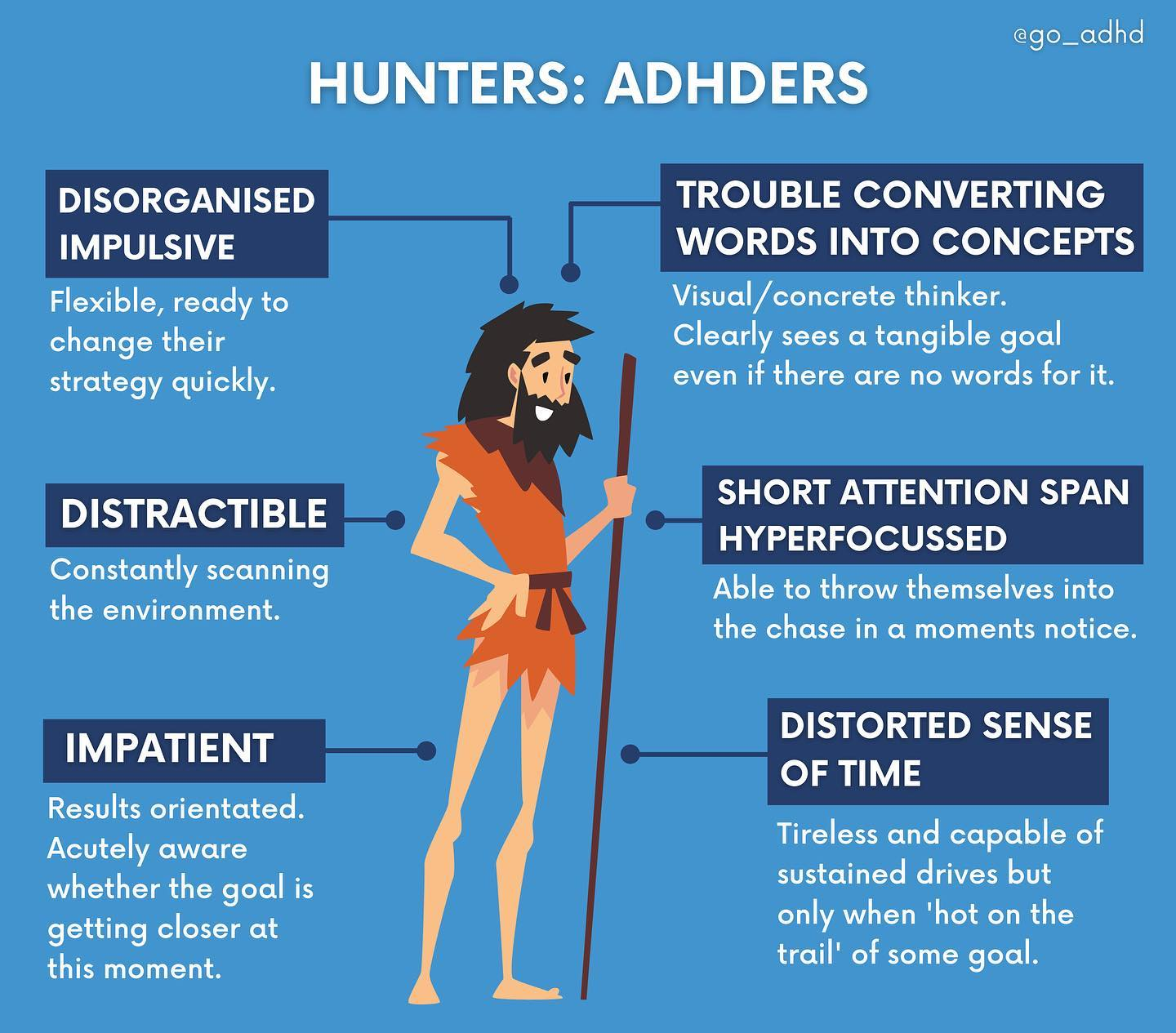

An example of this disconnect is the existence of attention-deficit/hyperactivity disorder, or ADHD. Despite the name, I see it less as a disorder than as a niche operating system - albeit one that struggles with the amount of consistent attention that must be maintained to succeed in our modern world. This dynamic has not always been the case.

Case Study: ADHD and the Hunter vs. Farmer Hypothesis

Theories around evolutionary psychology are notoriously difficult to test given our inability to time travel or run controlled experiments that span millions of years. However, dozens of studies have shown that individuals with ADHD characteristics had skills that were advantageous during the hunter-gatherer age that became maladaptive in the aftermath of the agricultural revolution.

Supporting evidence includes the finding that ADHD alleles enjoyed a selective advantage in the past but have been decreasing over the past 35,000 years. In addition, modern nomadic tribes have high rates of genetic mutations linked to ADHD, which implies that the lifestyle selects for those characteristics and that tribes in the distant past likely had a similar distribution.

These collectively form the "hunter versus farmer" hypothesis. A few key ideas from this theory that I resonated with:

- Individuals with ADHD traits excelled as hunters due to their ability to "hyperfocus" - in other words, to postpone eating, sleeping and other personal needs in order to stay absorbed in the "urgent task" (usually last-minute projects or preparations) for an extended time.

- They are motivated by survival and aggressive competition, which is helped by traits like hyperactivity and impulsivity.

- They excel at achieving clear, tangible goals that reward them with quick dopamine hits, but flounder when presented with ambiguity and long timeframes.

(Note that a lot of these traits are actually pretty helpful for working in tech. There is surprising similarity between the skills we need to pass our performance reviews and those we need to chase down buffalo in the prairie. We are part of an industry that rewards moving fast, emphasizes adapting to constant change, and glamorizes the ability to hyperfocus on a single problem for dozens of hours. A reason why so many people with ADHD end up in tech?)

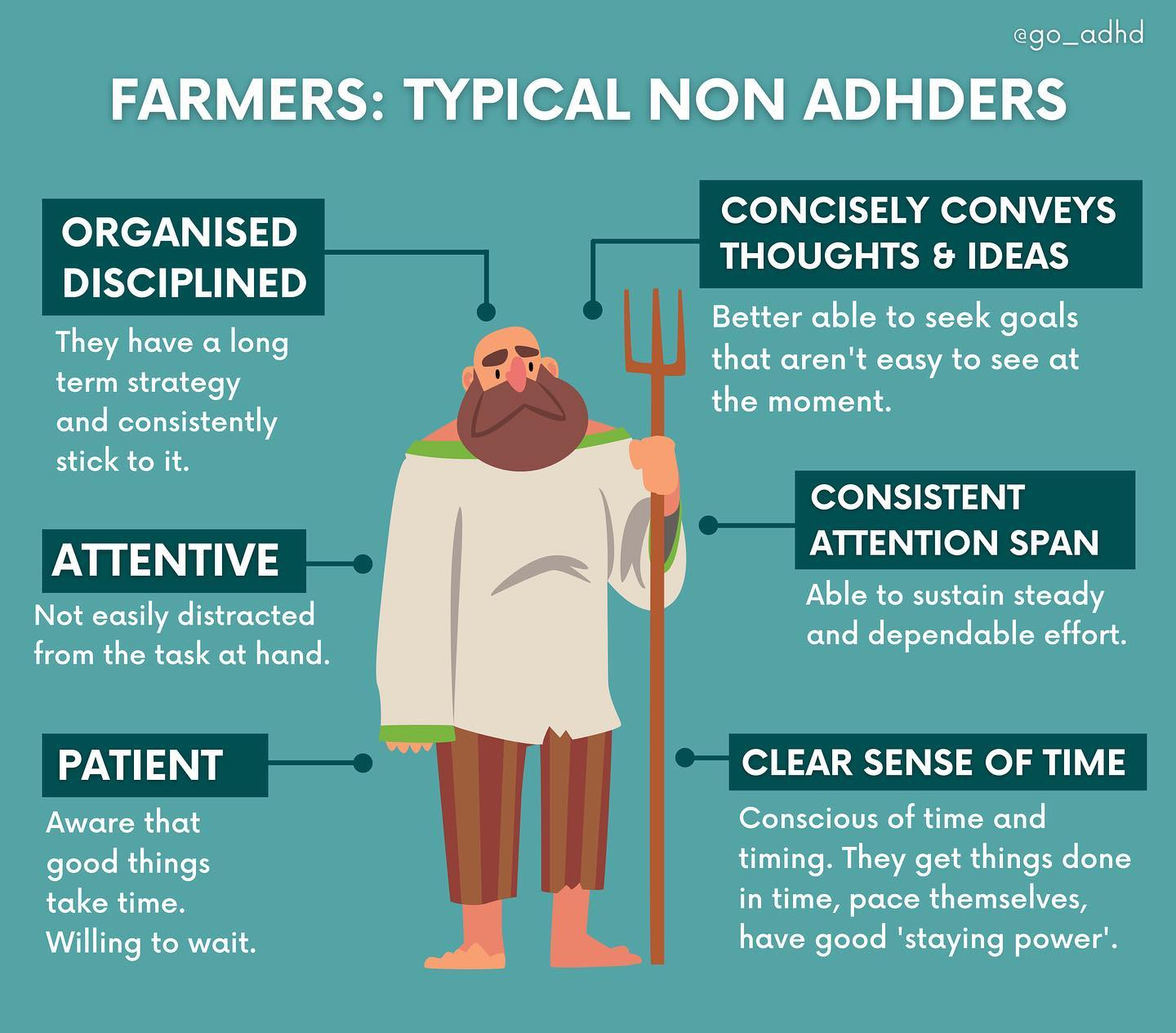

The agricultural revolution created a need for a different set of skills, one that emphasizes long-term success instead of short-term survival. Those traits are the exact opposite of what was needed to succeed as a hunter and are generally more suited to meeting the needs of modern life.

(Different types of brains, or operating systems, are more suited to certain purposes than others. That doesn't make any of them inferior! You probably wouldn't buy a Mac as your main gaming PC, for example, but that doesn't mean that macOS is a disorder while Windows is the paragon of health.)

Unlike in the majority of human history, however, people today have to play many different roles at once. They must be both 'hunters' and 'farmers' - able to adapt quickly but also stick to a long-term strategy, hyperfocus but also pace themselves, pay attention to constantly emerging trends while maintaining focus on the task at hand. 'Hunters' struggle with the needs of modern life, but so do many of the 'farmers' - just in different ways.

Modern farmers spend far more time on fast-paced efforts like business planning, marketing, and navigating government regulations than tilling their crops. Everything is connected to everything in today's world. Unless you are extraordinarily fortunate, you will have to do things that don't come easily to you in order to survive.

Better Living through Cloud Computing

How do we close the gap between the functionality of our brains and the wide range of needs in the world that we live in? In my opinion, the answer lies in the conclusion reached by software developers in the past two decades - at some point, we can't handle everything ourselves and must learn how to intentionally integrate with external resources.

I want to emphasize one word - intentionally - because we have all drawn from "external resources" at some point in our lives, whether it was StackOverflow or TikTok. Many of us have tools both digital and analog - calendars, to-do lists, notebooks - to save information externally so that it can be accessed later, instead of relying on our ephemeral memory.

Those methods are rarely up to the task, however; we start forgetting to add things to the calendar, we give up on our to-do lists when they get too long, and we take notes that we never look at again. We try out frameworks and tools when we hear about them, and then drop them when they don't work perfectly out-of-the-box. Instead of a clear pipeline between our brains and the external resources available to us, we default to a handful of scattered, duct-tape solutions that strain under load.

We should design the way we integrate with external resources with just as much intention, precision, and effort as we do the architectures of our cloud applications. We need to test and iterate with the same discipline as we do with software. We have to build solutions that are "cloud-native" - resilient, scalable, fast, and efficient from the start. We need to identify and reduce the suboptimal ways in which we integrate with external resources. By doing so, we can transcend the limitations of our millennia-old legacy hardware to prevail against the challenges of modern life. (Or at least, put up a better fight.)

We should implement cloud computing for our brains. In my next post, I'll talk about how. I'll discuss the key parts of a brain-cloud architecture, how to implement each part, troubleshooting, and much more.

See you next time!

As always, if you have thoughts, do leave a comment.

(If you're not seeing the option, you'll need to sign up as a subscriber. It's free!)